Since the arrival of ChatGPT at the latest, artificial intelligence (AI) has become the topic of our time, including in the field of software medical devices.

Many manufacturers are unsure about the extent to which AI can be used in medical devices at all. For this reason, in this article we want to look at the use of artificial intelligence, in particular machine learning (ML), in regulated medical devices.

In this guide, we focus on the implications of the Medical Device Regulation (MDR) for AI-based medical devices.

We want to clarify the following questions, among others:

- Is a medical device even allowed to contain AI? Do you even get an approval for this?

- Is an AI medical device allowed to continue learning during use in live operation?

- Which special laws and ISO/IEC standards must be complied with?

- What does the notified body want to see in relation to AI medical devices?

- What do you need to consider during the development of artificial intelligence as a medical device?

- Can you use LLMs like ChatGPT in your medical device according to MDR?

The first question that arises here is:

What makes AI so special in a regulatory sense?

Why should one expect any extra treatment at all in terms of legislation?

Content of this article

- 1. Regulatory peculiarities of artificial intelligence (AI)

- 2. MDR approval: medical devices with artificial intelligence

- 2.1 Requirements for the development of AI medical devices

- 2.2 What does the notified body expect in relation to AI-based medical devices?

- 2.3 Relevant standards and guidelines for artificial intelligence in medical devices

- 2.4 Which machine learning models can be certified as medical devices?

- 2.5 Can you use LLMs such as ChatGPT in your medical device according to MDR?

- 2.6 Costs and effort for approval

- 3. Additional regulatory requirements for AI

- 4. Conclusion

1. Regulatory peculiarities of artificial intelligence (AI)

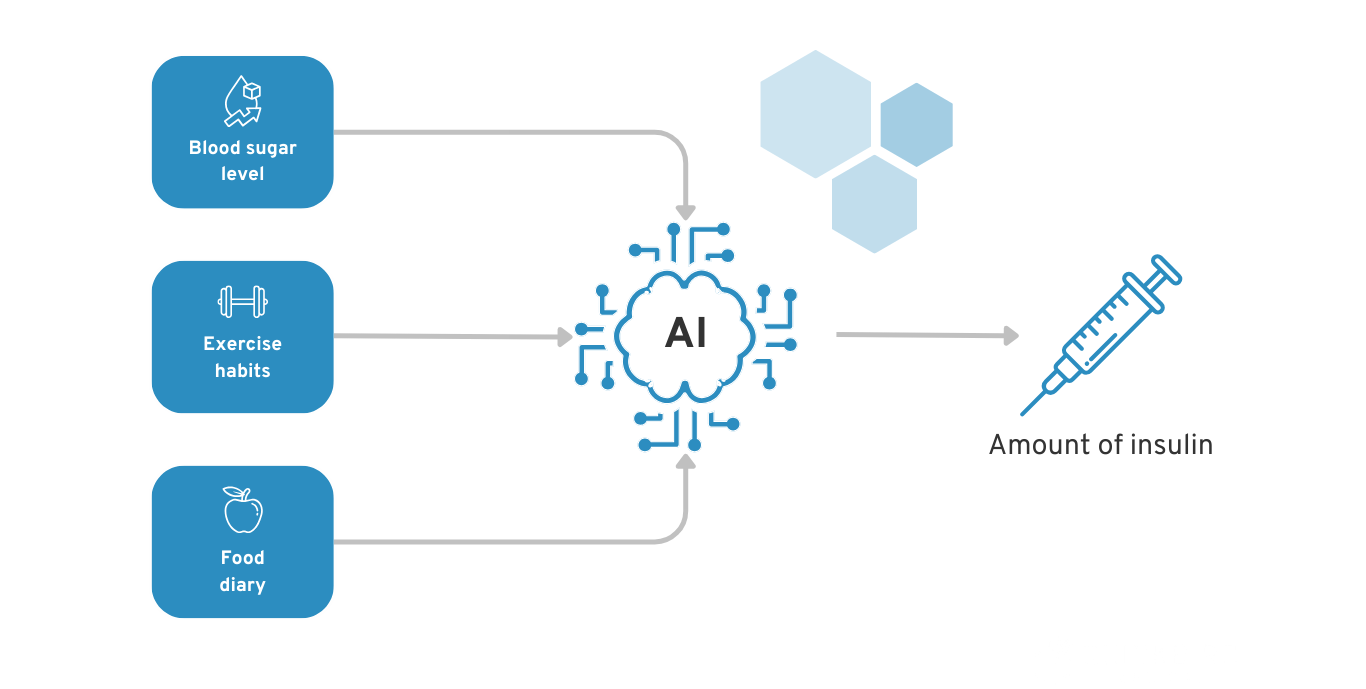

To understand the regulatory peculiarities of AI, let’s take a look at the following example:

We have a diabetes app that uses patient data to recommend when and how much insulin the diabetes patient should inject. Data such as blood sugar levels, the food diary and exercise habits are taken into account.

Example of AI for diabetes patients to recommend insulin dosage

Does it make a difference whether an AI calculates this recommendation or, for example, a rule-based decision tree?

Unfortunately, the answer is “yes and no”. In theory, the legal basis of the Medical Device Regulation (MDR) is identical for classic software and AI-based software.

In practice, you can expect regulatory challenges with AI-based software due to the technical nature of AI. The most important features of AI that play a major role in regulatory terms are as follows:

1.1 Interpretability

An AI is usually a “black box” for humans. Even for the developer of the AI, it can be difficult to understand how exactly the artificial intelligence has calculated certain outputs from the input data.

Decisions made by artificial intelligence are often incomprehensible to humans

In our diabetes scenario, for example, it is not readily apparent how exactly the AI calculates the specific amount of insulin and the optimal injection time. This makes it difficult for the AI developer to say whether the system really works properly in all situations. This of course carries high risks for the diabetes patient, who could suffer physical harm or even die from an insulin underdose or overdose.

Simple decision trees or linear regression models, by contrast, are transparent and easy for people to understand. Accordingly, with the necessary medical expertise, it is much easier to validate whether the system is working properly.
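To illustrate what "transparent" means in practice, here is a minimal sketch of a one-feature linear regression fitted with ordinary least squares. The numbers, the feature name `carbs_g` and the function `fit_line` are purely illustrative assumptions with no clinical meaning. The point is only that the complete decision logic can be read from two parameters.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for the model y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical toy data, NOT clinical data.
carbs_g = [20, 40, 60, 80]
units = [2.0, 4.0, 6.0, 8.0]

slope, intercept = fit_line(carbs_g, units)

# The whole "model" is this one formula, which a medical expert can review.
print(f"units = {slope:.3f} * carbs_g + {intercept:.3f}")
```

A reviewer can check such a model line by line. With a deep neural network, the same review would involve millions of parameters that have no individual meaning.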

You could say that the developer of conventional software already knows the basis on which decisions will be made before development begins. AI, on the other hand, only develops this decision model on the basis of the data it is fed and is not necessarily able to explain this model to the developer, doctor or patient afterwards.

Regulatory challenge: With medical device software according to MDR, you must validate that your system works and that there are no unacceptable residual risks. From a regulatory perspective, this is not so easy without a deep understanding of the software system. You should therefore use findings and methods from the areas of transparency, explainability and interpretability of AI systems to approve your AI system.

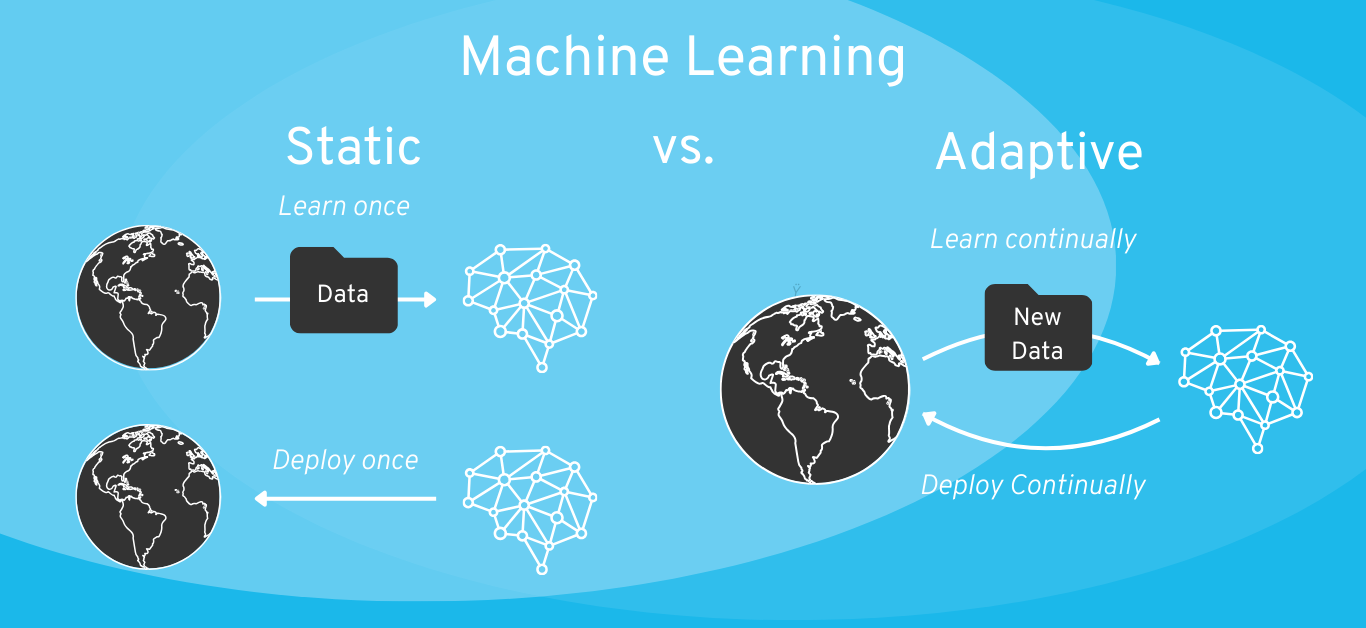

1.2 Continuous learning

AI, and machine learning in particular, has brought a new development that also poses regulatory challenges: systems that continue to learn independently and continuously. Some AI systems are also continuously trained while the product is in use and thus change their own behavior in live operation.

However, the continuous learning of the AI takes place outside the developer’s direct sphere of influence. This makes it all the more difficult to predict whether the AI will continue to function properly in the future.

Example: In 2016, Microsoft released a Twitter bot that automatically generated tweets and actively learned from new data. However, after deliberate manipulation by the community, the bot began to make many racist statements. This is a good example of how self-learning systems are more difficult to control.

For comparison, there is of course also the option of only initially training AI systems with data during development and “freezing” them after integration into the product (also known as “static” AI). As we explain later in the article, this should be the preferred option for most software medical devices in terms of regulatory approval.
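One way to make "freezing" verifiable is to record a fingerprint of the model parameters at release and check it before every prediction. The following is a minimal sketch under assumptions: the parameter dictionary, the `FrozenModel` class and the toy linear model are hypothetical and only serve to show the idea.

```python
import hashlib
import json


def fingerprint(params: dict) -> str:
    """Return a SHA-256 hash over the canonical JSON form of the parameters."""
    canonical = json.dumps(params, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


class FrozenModel:
    """Hypothetical wrapper that refuses to predict if the parameters changed."""

    def __init__(self, params: dict, released_fingerprint: str):
        self._params = params
        self._released = released_fingerprint

    def predict(self, x: float) -> float:
        # Compare the current parameters with the fingerprint recorded at release.
        if fingerprint(self._params) != self._released:
            raise RuntimeError("Model parameters differ from the released version")
        return self._params["slope"] * x + self._params["intercept"]


# Toy parameters (assumption), fingerprinted once at "release".
params = {"slope": 0.1, "intercept": 0.0}
released = fingerprint(params)

model = FrozenModel(params, released)
print(model.predict(40))
```

In a real product, the recorded fingerprint would typically be stored with the release documentation so that any later change to the model is detected and goes through change management.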

Regulatory challenge: Self-learning AI systems change their own behavior even after initial regulatory approval. This change in behavior is usually based on non-validated data provided by users. This brings with it regulatory challenges for validation and change management in the context of the MDR medical device.

1.3 Dependence on data

Data is a key resource for an AI medical device. While conventional software usually works on the basis of predefined rules, a machine learning algorithm derives these rules from the data with which it is trained.

If you want to develop a new type of AI model, you will therefore first need data. If you do not have access to reliable medical data, you may have to laboriously generate it yourself within a clinical trial.

You do not need training data for pre-trained models (such as GPT-4, the model behind ChatGPT). Unfortunately, these are currently rarely reliable enough for medical applications due to so-called “hallucinations”.

Regulatory challenge: To approve a reliable AI model, you need access to valid medical data. Obtaining this data can be costly and time-consuming.

In the next chapter, we will look at how you should take these special features of artificial intelligence (AI) into account when approving your medical device in accordance with the MDR.

Note: For the sake of simplicity, this article will deal with AI in general. Overall, however, this includes various subcategories such as Generative AI, Large Language Models (LLM) and Machine Learning (ML). These terms are also used as examples in some places.

2. MDR approval: medical devices with artificial intelligence

There are currently (still) no separate requirements for software medical devices with AI components (as of January 2024). In the MDR, you will search in vain for terms such as AI or machine learning.

From a regulatory perspective, the most important standards for software-based medical devices continue to be, among others:

- ISO 13485: Quality management system (to the ISO 13485 guide)

- IEC 62304: Software lifecycle processes (to the IEC 62304 guide)

- IEC 82304-1: Requirements for health software

- IEC 62366-1: Usability engineering

- ISO 14971: Risk management

More information on the development of software medical devices (with or without artificial intelligence) can be found here.

There is currently no established (let alone harmonized) standard specifically for the development of AI-based medical devices. Artificial intelligence is currently regulated in the same way as traditional software products in terms of the MDR and the above-mentioned standards.

However, it is not quite that simple:

You adhere to the same standards and laws as for classic medical device software. However, as with any other medical device, you must of course also meet the following requirements, among others:

- You must have the safety of the medical device under control: What is the risk of someone being harmed by using your product? How can you minimize this risk? Is the residual risk acceptable? And so on.

- You must be able to ensure and prove the performance of your medical device: Can your product really deliver the intended performance? Can your software really be used to diagnose diseases, for example, or is your statement just based on gut feeling? With what accuracy and error rate can a disease be diagnosed? And so on.
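Questions such as "with what accuracy and error rate?" are usually answered with metrics like sensitivity and specificity, reported together with a confidence interval. The sketch below computes both from a confusion matrix and adds a Wilson score interval. The counts are invented for illustration, and the choice of the Wilson interval is an assumption, not a requirement of the MDR.

```python
import math


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int):
    """Sensitivity = TP / (TP + FN), specificity = TN / (TN + FP)."""
    return tp / (tp + fn), tn / (tn + fp)


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95 %)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# Hypothetical validation counts (assumption, not real study data).
tp, fn, tn, fp = 90, 10, 180, 20

sens, spec = sensitivity_specificity(tp, fn, tn, fp)
sens_low, sens_high = wilson_interval(tp, tp + fn)

print(f"sensitivity {sens:.2f} (95% CI {sens_low:.3f} to {sens_high:.3f})")
print(f"specificity {spec:.2f}")
```

The interval makes visible how much a point estimate depends on the size of the validation set: the same 90 % sensitivity measured on 1,000 positive cases would come with a much narrower interval.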

With artificial intelligence, the above-mentioned questions about safety and performance are usually much more difficult to answer than with software based on transparent, rule-based algorithms. This is mainly due to the special features of artificial intelligence mentioned in the previous chapter. AI is initially a “black box”, and its safety and performance are difficult to assess.

How do you know that your artificial intelligence will work properly in every application context?

Example: You develop an app to diagnose skin cancer using the camera on your smartphone. Let’s assume that the training data for your AI is from Germany and therefore may contain more images of people with lighter skin tones than of people with darker skin tones. Can you make sure that your AI prediction works properly for people with darker skin tones? Also: How does your algorithm deal with a picture that also shows a scar or a port-wine stain? Are you really sure that such cases are sufficiently represented in the AI’s training data?
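A simple way to make such gaps visible is to report performance per subgroup instead of only one overall number. The following sketch computes sensitivity per group. The group names and the records are invented for illustration, and `sensitivity_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, true_label, predicted_label), 1 = disease present."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, truth, pred in records:
        if truth == 1:  # only diseased cases count towards sensitivity
            if pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


# Hypothetical evaluation records (assumption, not real data).
records = (
    [("lighter skin", 1, 1)] * 9 + [("lighter skin", 1, 0)] * 1
    + [("darker skin", 1, 1)] * 3 + [("darker skin", 1, 0)] * 2
)

for group, value in sorted(sensitivity_by_group(records).items()):
    print(f"{group}: sensitivity {value:.2f}")
```

In this toy data, the overall sensitivity of 0.80 would hide the fact that the model misses far more cases in the smaller subgroup.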

The challenge here is not so much to implement a specific AI standard, but basically:

“Are you familiar enough with the development of artificial intelligence to be able to demonstrably develop a safe and powerful AI model?”

And even if you have the expertise, it is not easy to obtain medical data for training your AI, especially in Germany. So the second big relevant question is:

“Do you have enough high-quality training data for your use case?”

2.1 Requirements for the development of AI medical devices

As described above, there is currently no harmonized standard for MDR medical devices that defines specific requirements for the development of AI devices. However, there are already standards and guidelines that you can use as a guide to cover the important aspects.

We have compiled a sample list of questions to illustrate the technical complexity. To ensure that your AI does not end up making the wrong disease diagnoses or giving dangerous treatment instructions, you should consider the following aspects, for example:

| Question | Example of a potential problem |
| --- | --- |
| Where does the training data for your AI come from? | Your training data is from a source for which you cannot check the validity of the data. The data does not adequately represent the real world and your AI is faulty in its application. |
| Does the training data adequately represent your use case and the target patient group? | Your training data covers just one of many application contexts. The AI only works in one context and fails in other situations. |
| How do you define “ground truth” in the context of your machine learning model? Where does the labeling data for your model come from and can you trust the truth of these labels? | The labels for your training data were assigned by a single person based on a subjective assessment. Other people would have assigned different labels for the same data. The labels do not represent a fundamental truth. |
| Has your model been validated with data that was not previously used for training and testing? | Your AI was validated with the same data that was used in training and testing. The validation is therefore successful for the training data, but the AI is incorrect for completely new data in live operation of the product (also known as “overfitting”). |
| Have you sufficiently characterized and narrowed down the target patient group and target usage environment? | Your training data covers just one of many areas of application for your AI product. The AI therefore only works in the scenarios for which it has been trained, but not in other scenarios that are part of the real application area. |
| How does your model react to data that it did not see during training, especially rare or atypical cases? | Your AI could make incorrect diagnoses or suggest unsuitable therapies for rare diseases or atypical patient data because it has not been adequately trained for such cases. |
| How robust is your model against manipulation or external attacks, especially when it comes to the security of patient data? | Vulnerabilities to cyberattacks could not only affect the functionality of your AI, but also put sensitive patient information at risk. |
| Have you used methods that make it possible to explain and understand the decisions of your AI? | Without explainability, it could be difficult to understand how the AI arrived at a particular diagnosis or treatment recommendation. Your AI model could be faulty without you knowing about it. |
| Does your data contain all the necessary features and can you justify the selection of the observed features? | Your training data does not contain all the relevant characteristics that are necessary for a precise diagnosis or therapy recommendation. |
| Etc. | And so on. |
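The question about validation data can be made concrete with a small sketch. A common pitfall is that several samples from the same patient end up in both the training and the validation set, which makes validation results look better than they are. The code below splits by patient instead of by sample. The field name `patient_id`, the split ratio and the function name are assumptions for illustration.

```python
import random


def split_by_patient(samples, test_fraction=0.2, seed=42):
    """Split samples so that all samples of one patient land on the same side."""
    patient_ids = sorted({s["patient_id"] for s in samples})
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_ids = set(patient_ids[:n_test])
    train = [s for s in samples if s["patient_id"] not in test_ids]
    test = [s for s in samples if s["patient_id"] in test_ids]
    return train, test


# Hypothetical dataset: 10 patients with 3 images each.
samples = [
    {"patient_id": f"P{p:02d}", "image": f"img_{p}_{i}.png"}
    for p in range(10)
    for i in range(3)
]

train, test = split_by_patient(samples)
train_patients = {s["patient_id"] for s in train}
test_patients = {s["patient_id"] for s in test}

print(len(train), "training samples,", len(test), "held-out samples")
print("patients in both sets:", train_patients & test_patients)
```

The held-out set should then stay untouched until the final validation, so that it really represents data the model has never seen.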

These are just a few examples to illustrate the complexity and the list could easily be extended by many more points. A good list of relevant questions can also be found in the new questionnaire for notified bodies on the topic of artificial intelligence in medical devices. This leads us to the next point:

2.2 What does the notified body expect in relation to AI-based medical devices?

It is worth taking a look at the current list of questions for notified bodies for AI-based medical devices.

You can download the current versions here: Questionnaire of the notified body

(Tip: Get yourself a coffee and wait a few minutes for the download to start. It seems that the IG-NB servers are constantly overloaded).

Questionnaire of the notified body on artificial intelligence

You should expect to be confronted with such questions from the notified body during the audit of your medical device. You need a conclusive answer that can also be found in the technical documentation for your medical device.

If you develop an AI software product that falls under risk class I according to the MDR, you do not need to undergo an audit by the notified body. However, you will be audited by your supervisory authority at unannounced intervals. Here, too, it is worth taking a look at the questionnaire.

Do you need support with the development of a medical AI system?

We develop medical AI systems for companies in the healthcare sector on a contract basis.

Our AI services:

– Technical review of medical AI systems

– Development of new machine learning models in the healthcare sector

– Individual advice on regulatory compliance for your planned AI-based product

2.3 Relevant standards and guidelines for artificial intelligence in medical devices

As mentioned above, do not expect any specific new legal obligations for AI products under the MDR for the time being.

To put it provocatively: If you meticulously adhere to the classic standards for the development of medical software listed above, you don’t need an AI standard.

The practical implications of the MDR for the implementation of AI arise indirectly from, among other things:

- … the control measures for AI risks to human health (ISO 14971).

- … the mandatory verification and validation of the AI system (ISO 13485, IEC 62304).

In practice, however, it is advisable to deal with certain AI-specific standards and guidelines for the following reasons:

- Assistance for development: The development of reliable AI algorithms is complex. If you are not an absolute expert in this area, a guide will help you to identify and effectively avert all the technical risks of AI.

- Scope for interpretation of the MDR and medical device standards: Different inspection bodies have different interpretations of the MDR requirements and standards. A look at the questionnaire of the notified bodies will help you to better understand the expectations of your future auditor.

- Basis for argumentation in the audit: Your auditor will want to know how you ensure that your AI works reliably. If you answer “I’m an AI expert and I just know this”, this is a much less satisfactory answer for auditors than “We have adhered to the following standards and guidelines during development and validation”. Public acceptance of a standard or guideline creates trust in communication with the auditor.

Some AI-specific guidelines and standards that may be relevant to you in this context include the following:

- FDA guidance on the use of machine learning in Software as a Medical Device (SaMD)

- Questionnaire of the notified body

- Guide to AI in medical devices by Christian Johner, Christoph Molnar et al.

- BS/AAMI 34971:2023, Application of ISO 14971 to machine learning in artificial intelligence

- ISO/IEC TR 24028:2020, Edition 1.0 – Information technology – Artificial intelligence – Overview of trustworthiness in artificial intelligence

- ISO/IEC TS 4213:2022, Information technology – Artificial intelligence – Assessment of machine learning classification performance

- ISO/IEC FDIS 23894 Information technology — Artificial intelligence — Guidance on risk management

- ISO/IEC 5338 Information technology — Artificial intelligence — AI system life cycle processes

- ISO/IEC FDIS 5339 Information Technology – Artificial Intelligence – Guidance for AI applications

- ISO/IEC DIS 5259-2 – Artificial intelligence — Data quality for analytics and machine learning (ML) — Part 2: Data quality measures

- ISO/IEC DIS 5259-3 – Artificial intelligence — Data quality for analytics and machine learning (ML) — Part 3: Data quality management requirements and guidelines

- ISO/IEC 5259-4 – Artificial intelligence — Data quality for analytics and machine learning (ML) — Part 4: Data quality process framework

We hope that a standard (or several) for the development of AI-based medical devices will be established and harmonized in the near future. An established standard helps both the companies during development and the notified bodies during auditing. However, it is not yet possible to predict when this will happen.

2.4 Which machine learning models can be certified as medical devices?

Even the notified bodies seem to have difficulty answering this question. An earlier version of the questionnaire for notified bodies (version 4, dated 09.06.2022) still explicitly stated that

- “Static locked AI” is certifiable (products with a completed learning process and comprehensible decisions)

- “Static black box AI” can be certified in individual cases (products with a completed learning process and incomprehensible or only partially comprehensible decisions)

- “Continuous learning AI” is not certifiable (products whose learning process continues in the field)

In the current version of the questionnaire (version 5, dated 15.12.2023), however, this clear statement appears to have been weakened. For the latter in particular, there are now possibilities for certification, provided that suitable measures have been established by the manufacturer.

“Practice has shown that it is difficult for manufacturers to sufficiently prove the conformity for AI devices, which update the underlying models using in-field self-learning mechanisms. Notified bodies do not consider medical devices based on these models to be “certifiable” unless the manufacturer takes measures to ensure the safe operation of the device within the scope of the validation described in the technical documentation.”

What exactly these “measures to ensure the safe operation of the product […]” are in practice, however, remains open. Even though the original statement that certification of “Continuous learning AI” is impossible has been removed, it is still not clearly defined in which cases an exception can be made. In practice, this is also strongly related to the risk class of the product. Depending on the extent of the potential harm if the AI malfunctions, the residual risks of self-learning systems may or may not be acceptable.

Machine learning model: Static vs. adaptive (or “self-learning”)

If you are planning to develop a continuous learning system, you should definitely define the extent to which the algorithm is allowed to continue learning in the field.
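One conceivable way to define such limits is a release gate: a newly learned model version only replaces the current one if it still meets predefined criteria on a locked validation set. The sketch below is an assumption about how this could look, not a procedure prescribed by the notified bodies. The thresholds, the toy classifiers and the function names are hypothetical.

```python
def accuracy(model, dataset):
    """Share of (input, label) pairs that the model classifies correctly."""
    correct = sum(1 for x, y in dataset if model(x) == y)
    return correct / len(dataset)


def accept_update(current, candidate, locked_validation,
                  min_accuracy=0.9, max_drop=0.0):
    """Accept the candidate only if it meets the absolute minimum
    and is not worse than the current model by more than max_drop."""
    cand_acc = accuracy(candidate, locked_validation)
    curr_acc = accuracy(current, locked_validation)
    return cand_acc >= min_accuracy and cand_acc >= curr_acc - max_drop


# Locked validation set (toy data, assumption): (feature, label) pairs.
locked_validation = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]


def current(x):
    return int(x > 0.5)


def candidate_ok(x):
    return int(x > 0.45)  # behaves identically on the locked set


def candidate_bad(x):
    return int(x > 0.3)  # misclassifies the sample at 0.4


print(accept_update(current, candidate_ok, locked_validation))
print(accept_update(current, candidate_bad, locked_validation))
```

The acceptance criteria themselves would have to be justified in the risk management file and described in the technical documentation.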

Example: As part of risk management, it is also necessary to analyze what kind of risk control measures could be introduced. With an insulin pump, for example, you could say that the minimum and maximum insulin doses are fixed and only the optimization in between is based on AI.
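The idea of fixed limits around an AI output can be sketched in a few lines. The function name and the limit values below are placeholders without any clinical meaning. In a real device, the limits would come from the clinical evaluation and the risk analysis.

```python
import math


def bounded_dose(ai_suggestion: float, min_dose: float, max_dose: float) -> float:
    """Clamp an AI-suggested value to fixed, rule-based limits."""
    if min_dose > max_dose:
        raise ValueError("min_dose must not exceed max_dose")
    if math.isnan(ai_suggestion):
        raise ValueError("AI output is not a number")
    # The AI may only optimize inside the interval [min_dose, max_dose].
    return max(min_dose, min(max_dose, ai_suggestion))


# Placeholder limits (assumption, no clinical meaning).
MIN_DOSE, MAX_DOSE = 1.0, 10.0

print(bounded_dose(5.0, MIN_DOSE, MAX_DOSE))   # inside the limits, passed through
print(bounded_dose(50.0, MIN_DOSE, MAX_DOSE))  # capped at the maximum
print(bounded_dose(-3.0, MIN_DOSE, MAX_DOSE))  # raised to the minimum
```

Because the limits are plain rule-based code, they can be verified like conventional software, independently of how the AI model behaves.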

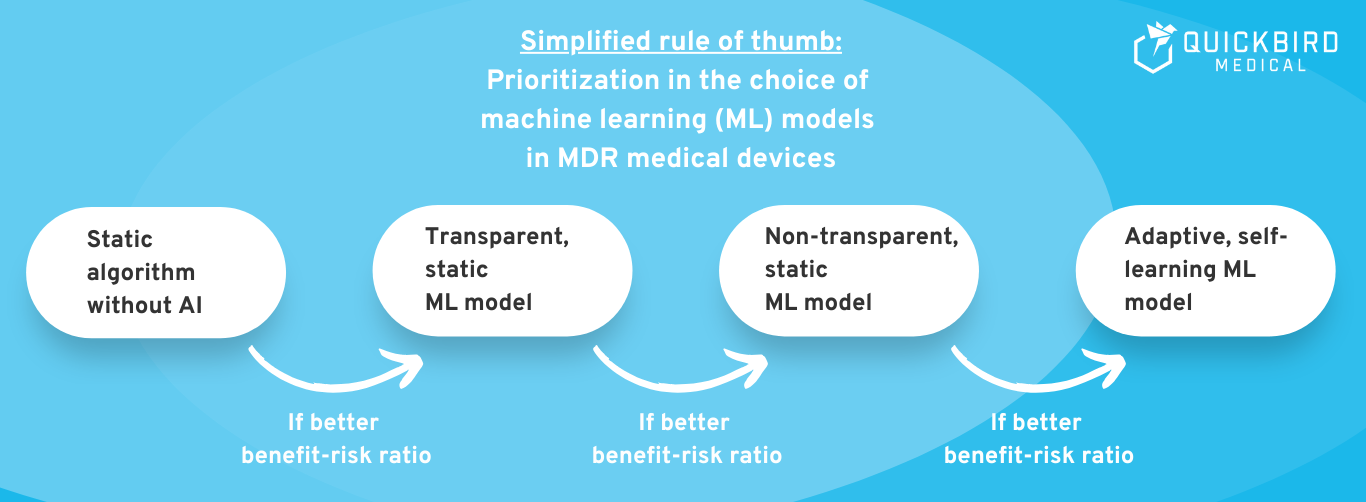

Rule of thumb: As a general rule, a simpler (more interpretable) ML model is preferable to a “black box”, and static AI is preferable to a self-learning AI system. If necessary, discuss your plan with your notified body (for risk class IIa or higher). The scope for interpretation of the legislation is wide and you must therefore expect different expectations from different auditors.

Image: Highly simplified rule of thumb: Which type of AI should you choose?

2.5 Can you use LLMs such as ChatGPT in your medical device according to MDR?

Generative AI and especially Large Language Models (LLMs) have great potential in medicine. The currently best-known LLMs include, for example:

- GPT-4 from OpenAI (used in ChatGPT)

- Llama 2 from Meta

- Claude 2 from Anthropic

- Especially in the medical field: e.g. Med-PaLM from Google

The big advantage of these LLMs:

- These are pre-trained models. You do not have to train the AI model yourself and therefore do not need any training data.

- The capabilities of these pre-trained models are diverse. The use of LLMs enables many innovations in medicine that were previously unimaginable.

Can you use generative AI and especially LLMs in your medical device from a regulatory perspective?

From a regulatory perspective, there are no explicit restrictions here. You can potentially use any machine learning model as long as you ensure that the product is safe and fulfills its medical purpose.

Unfortunately, LLMs are not yet reliable enough for many medical applications (keyword: “hallucinations”). This results in an unacceptable risk for your medical device according to MDR in many areas of application. For less critical areas of application, however, it is not explicitly ruled out that you integrate an LLM into your medical device. This must be considered on a case-by-case basis.

In the future, LLMs will certainly be continuously improved, which will open up many new areas of application in medicine.

2.6 Costs and effort for approval

Factor 1: Risks of your medical device

How precise you need to be when developing and validating your AI model depends heavily on the level of risk of your medical device software. The higher the risk of your product, the more complex and cost-intensive the approval process will be.

💡 Example of a high risk:

Suppose you are developing an AI algorithm to calculate the optimal radiation dose for an irradiation device. If your AI system makes a mistake, the cancer patient can die from a radiation overdose in the worst case. Accordingly, you need to invest a lot of effort in the development and validation of your medical device software in order to reduce the probability of errors to almost zero.

💡 Example of a low risk:

Suppose you are developing a digital health application for the treatment of obesity or overweight. Your AI system is now supposed to suggest the optimal fitness exercise depending on the time of day and the user’s mood. If your algorithm makes a less than optimal recommendation from time to time, no one is directly harmed. Accordingly, you have the option of accepting a certain error rate as a residual risk when developing the AI system. The effort required to develop and validate the system is manageable.

Rule of thumb:

You need significantly more data and have to invest more time in the development of your AI model if your product is in a higher risk class according to MDR and a higher safety class according to IEC 62304.
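The difference between the two examples can be illustrated with a simple risk matrix of the kind often used in risk management according to ISO 14971. Note that the standard does not prescribe specific categories or thresholds. The scales, scores and acceptance limits below are invented for this sketch and would have to be defined in your own risk management plan.

```python
# Hypothetical ordinal scales (assumption, not taken from ISO 14971).
SEVERITY = {"negligible": 1, "minor": 2, "serious": 3, "critical": 4, "catastrophic": 5}
PROBABILITY = {"improbable": 1, "remote": 2, "occasional": 3, "probable": 4, "frequent": 5}


def risk_level(severity: str, probability: str) -> str:
    """Classify a risk by the product of its severity and probability scores."""
    score = SEVERITY[severity] * PROBABILITY[probability]
    if score >= 10:
        return "unacceptable"
    if score >= 5:
        return "reduce further"
    return "acceptable"


# Radiation dose example: even a rare error can be fatal.
print(risk_level("catastrophic", "remote"))

# Fitness exercise example: occasional suboptimal suggestions, no direct harm.
print(risk_level("negligible", "occasional"))
```

With a catastrophic severity, the error probability has to be pushed to the lowest category before the risk becomes acceptable in this toy matrix, which is where the additional data and validation effort comes from.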

Factor 2: Benefits of your medical device

According to the MDR, the risks of a medical device must always be weighed against its benefits. For a novel medical device with high benefits, greater risks can therefore be acceptable under certain circumstances.

An AI product that makes rare errors but saves significantly more human lives than would be saved without it can potentially still be approved.

However, if your product would work just as reliably with classic, rule-based algorithms, you should steer clear of AI. You have a duty to reduce all risks associated with your medical device as far as possible. Since AI creates many new risks, a simple, transparent algorithm is preferable in case of doubt.

3. Additional regulatory requirements for AI

In addition to the Medical Device Regulation (MDR), you may also be affected by other legal requirements:

- General Data Protection Regulation (GDPR): Although the GDPR does not specifically address AI in its wording, it certainly has implications for it. The most important implications of the GDPR arise, for example, from the requirement of “technical and organizational measures” (TOM) for the protection of personal data. In terms of cyber security, for example, there are special challenges for AI systems.

- Artificial Intelligence Act (AI Act) of the European Union: The AI Act regulates the use of AI within the European Union. As the EU AI Act is not a regulation specifically for medical devices and an explanation of it would go beyond the scope of this article, we will not go into it in detail here. Here you will find a detailed guide to the AI Act specifically for medical device manufacturers.

In this guide, we will limit ourselves to the legislation that can have a major impact on AI in particular. Of course, there are many other laws (e.g. TMG, BDSG etc.) that apply to every software product.

4. Conclusion

AI-based medical devices can of course also be approved under the MDR. How much effort and cost is involved depends on the risk and benefit of your medical device software.

Basically, you should consider whether the use of artificial intelligence makes your product significantly better in terms of its intended purpose. If you can achieve similar results with a transparent, rule-based algorithm, you may want to do without AI. It may not be worth the effort for your company and the notified body may also question the decision to use an AI-based system.

However, if your AI algorithm creates added value that cannot be achieved with traditional algorithms, then we would like to encourage you at this point.

As of July 2023, 692 medical AI systems have already been approved in the USA and new companies in Europe are also regularly receiving approval for their AI systems in accordance with the MDR. AI in radiology, for example, is no longer a novelty and achieves things in this field that would simply be impossible with rule-based algorithms.

Whether as a function within digital health applications (DiGA) or as decision support systems for doctors, we develop suitable machine learning models for your use case. If you are planning an AI-based medical device, please do not hesitate to contact us. We advise you individually on your planned product and, if required, implement the entire software system for you.